Building a Free, Open-Source Auto-Blogger: Generating and Publishing Content with AI

Hey there, SaaS hackers! 👋 If you're here, we've probably been in the same boat: you want to boost your SEO with blog content, and you're tired of watching your competitors climb the search rankings while your awesome product sits on page 2 (or worse). Well, buckle up, because we're about to change that.

In this tutorial, we're diving into the world of AI-powered content creation to boost your SEO game. We're not talking about churning out nonsense stuffed with keywords - nope, we're going to create a smart auto-blogger that pumps out content that's both search-engine friendly AND actually useful for your readers. The best of both worlds!

What's the Big Idea?

Picture this: You're focusing on other important things for your SaaS, and your auto-blogger is already hard at work, crafting blog posts tailored to your SaaS niche. By the time you wake up every morning, you've got fresh, SEO-optimized content ready to publish. Sounds like a dream, right? Well, we're about to make it a reality.

Here's what we're building:

- An AI-powered content generator using Google's Gemma2 model (via Ollama)

- A smart system to weave in your target keywords naturally

- An auto-publishing setup to post directly to your Ghost blog

Why Should You Care?

- SEO on Autopilot: Keep your blog fresh with minimal effort, signalling to search engines that your site is active and relevant.

- Scale Your Content Strategy: Produce more content in less time, covering a wider range of topics related to your SaaS.

- Stay Ahead of the Curve: While your competitors are burning the midnight oil writing blogs, you'll be focusing on what really matters - improving your product.

What We'll Cover

- Setting the Stage: We'll get our hands dirty with the tech setup - don't worry, I'll walk you through it step-by-step.

- The Brain of the Operation: We'll dive into how to use AI to generate content that doesn't sound like it was written by a robot.

- Keyword Magic: Learn the secret sauce of integrating keywords without setting off Google's spam alarms.

- Publishing Like a Pro: We'll set up automatic publishing to Ghost, so your content goes live without you lifting a finger.

- Interlinks: We'll interlink our blog posts for better SEO.

Ready to give your SaaS the SEO boost it deserves? Let's jump in and start building your new secret weapon!

Let's create an auto-blogger

Before starting, you'll need a few things:

- Ghost deployed on any VM: I have a self-hosted Ghost instance. You can either self-host or use the paid hosted version.

Install Ghost

docker run -d --name some-ghost -e NODE_ENV=development -e url=http://some-ghost.example.com ghost

- Ollama: We don't need Ollama in the cloud; you can run it locally. Ollama works on CPU-only machines as well. If you have a GPU, awesome; otherwise content generation will be a bit slow.

Install Ollama

curl -fsSL https://ollama.com/install.sh | sh

Both are super easy to install, with a one-command install for each. You can deploy both on your VM if you have one, or run them locally for testing.

- Ghost Admin API Key: You'll need an Admin API key to interact with the Ghost Admin API. You can grab it from your Ghost instance by adding a custom integration in the admin settings.

Generate content using Ollama

This is the most interesting part. Let's pull the gemma2 model in Ollama.

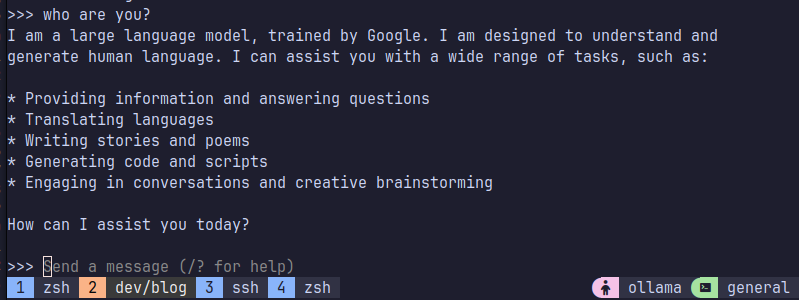

ollama pull gemma2

This will download the gemma2 model for you. It's that simple. Now you can start interacting with it if you want.

ollama run gemma2

So easy right?

Now, let's use it to generate our blog content. You'll have to give the model some context about your SaaS.

CONTEXT = """<introduction about your SaaS platform here>"""

After context, let's work on the actual prompt for our LLM.

PROMPT_TEMPLATE = f"""Here is a context about <your-saas-name-here>: {CONTEXT}.

Write an SEO-friendly catchy article about {content_theme} using <your-saas-name-here> in a news magazine style of more than 1000 words. Strictly follow the following JSON format and include all those keys as well for each section:

```{{
    "title": "The title of the blog post",
    "summary": "A brief summary of the blog post",
    "introduction": "The introduction paragraph(s)",
    "main_content": [{{
        "subheading": "Title of the subheading",
        "content": "Content for subheading"
    }},
    {{
        "subheading": "Title of the subheading",
        "content": "Content for subheading"
    }}],
    "conclusion": "The conclusion paragraph(s)",
    "tags": ["tag1", "tag2", "tag3"]
}}```
"""

As you can see, we are asking the LLM to generate content for our SaaS based on the given context. We also ask it to follow a strict template so that we get JSON output with the required keys. We will parse these keys later to create the Lexical JSON content for our blog post.

content_theme is optional. I keep a list of different themes and randomly pick one to generate content around. For example: content for a professional headshot theme, a beauty professional theme, or a cartoon theme.
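Here is a minimal sketch of how the random theme pick could work. The theme list, `pick_theme`, and `build_prompt` are hypothetical names for illustration, not part of the original script, and the prompt is shortened to its first two sentences.

```python
import random

# Hypothetical themes; replace them with topics that fit your own SaaS.
CONTENT_THEMES = [
    "professional headshots",
    "beauty professionals",
    "cartoon avatars",
]


def pick_theme(seed=None):
    """Pick one theme; passing a seed makes the choice repeatable."""
    return random.Random(seed).choice(CONTENT_THEMES)


def build_prompt(context, content_theme, saas_name="<your-saas-name-here>"):
    """Fill in the opening lines of the prompt for a given theme."""
    return (
        f"Here is a context about {saas_name}: {context}.\n\n"
        f"Write an SEO-friendly catchy article about {content_theme} "
        "in a news magazine style of more than 1000 words."
    )


if __name__ == "__main__":
    theme = pick_theme()
    print(build_prompt("<introduction about your SaaS platform here>", theme))
```

Building the prompt inside a function, rather than in a module-level f-string, means a fresh theme can be substituted on every run.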

Cool beans 🫘

Now that we have a prompt, let's create a function to generate content from it.

# .env file

OLLAMA_API_URL = "http://localhost:11434/api/generate" # assuming Ollama is running locally

import requests

def generate_content(prompt, seed):
    # Ask Ollama for a single, non-streamed completion.
    payload = {
        "model": "gemma2",  # must match the model pulled with `ollama pull gemma2`
        "prompt": prompt,
        "stream": False,
        "options": {
            "seed": seed,  # sampling seed; change it to get a different article
        },
    }
    response = requests.post(OLLAMA_API_URL, json=payload)
    if response.status_code == 200:
        return response.json()["response"]
    else:
        raise Exception(f"Failed to generate content: {response.text}")

And that's the final piece to generate content. 🥳

Now, we'll look into parsing and publishing this content.
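Since the prompt asks the model to wrap its JSON in a code fence, the raw reply may not be valid JSON on its own. The sketch below is one possible way to handle that, assuming the reply contains a single JSON object; `extract_json`, `missing_keys`, and `REQUIRED_KEYS` are hypothetical helpers, not part of the original script.

```python
import json

# Keys the prompt template asks the model to return.
REQUIRED_KEYS = ("title", "summary", "introduction", "main_content", "conclusion", "tags")


def extract_json(raw_response):
    """Return the dict found between the first '{' and the last '}', or {}."""
    start = raw_response.find("{")
    end = raw_response.rfind("}")
    if start == -1 or end <= start:
        return {}
    try:
        return json.loads(raw_response[start : end + 1])
    except json.JSONDecodeError:
        return {}


def missing_keys(parsed_content):
    """List the required keys the model left out."""
    return [key for key in REQUIRED_KEYS if key not in parsed_content]
```

If `extract_json` returns an empty dict or `missing_keys` is not empty, one simple option is to call the model again with a different seed.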

Ghost Admin API client to publish content

Interacting with the Ghost Admin API is easy. Let's create some helper functions to retrieve and publish blog content.

# .env file

GHOST_API_URL = "https://<your-domain>/ghost/api/admin/"

GHOST_ADMIN_API_KEY = "<your-api-key>"

OLLAMA_API_URL = "http://localhost:11434/api/generate" # assuming Ollama is running locally

This is our env file with all the required data that we created earlier. We'll add more as we move on.
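The post does not show how these values reach the Python code, so here is a minimal standard-library sketch of one way to do it. `parse_env_line` and `load_env` are hypothetical helpers that only handle the simple `KEY = "value" # comment` lines used above; a dedicated dotenv package would be a reasonable alternative.

```python
import os


def parse_env_line(line):
    """Parse one `KEY = "value"  # comment` line; return (key, value) or None."""
    line = line.strip()
    if not line or line.startswith("#") or "=" not in line:
        return None
    key, _, raw = line.partition("=")
    raw = raw.strip()
    if raw[:1] in ('"', "'"):
        # Quoted value: keep everything up to the matching closing quote.
        end = raw.find(raw[0], 1)
        value = raw[1:end] if end != -1 else raw[1:]
    else:
        # Unquoted value: drop a trailing inline comment.
        value = raw.split(" #", 1)[0].strip()
    return key.strip(), value


def load_env(path=".env"):
    """Load settings from a .env-style file into os.environ (existing vars win)."""
    with open(path, encoding="utf-8") as env_file:
        for line in env_file:
            parsed = parse_env_line(line)
            if parsed:
                os.environ.setdefault(*parsed)
```

After calling `load_env()`, the settings can be read with, for example, `os.environ["GHOST_API_URL"]`.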

The API key that we get from Ghost has two parts, an id and a secret, separated by a colon. We have to use them to generate a JWT token. Here is a function for that.

import jwt # pip install pyjwt
from datetime import datetime

def generate_jwt():
    # The Admin API key has the form "<id>:<secret>".
    id, secret = GHOST_ADMIN_API_KEY.split(":")
    iat = int(datetime.now().timestamp())
    header = {"alg": "HS256", "typ": "JWT", "kid": id}
    # The token expires 5 minutes after it is issued.
    payload = {"iat": iat, "exp": iat + 5 * 60, "aud": "/admin/"}
    token = jwt.encode(
        payload, bytes.fromhex(secret), algorithm="HS256", headers=header
    )
    return token

Now, let's create a function to create a post. Here we prepare a payload for the Ghost /posts/ endpoint and use generate_jwt for token authentication.

import json
import logging

logger = logging.getLogger(__name__)

def parse_json_content(content):
    # Turn the LLM's JSON string into a dict; return {} if it is not valid JSON.
    try:
        return json.loads(content)
    except json.JSONDecodeError as e:
        logger.error(f"Failed to parse JSON content: {e}")
        return {}

def create_ghost_post(parsed_content, image, interlinking_map=None):
    url = f"{GHOST_API_URL}posts/"
    lexical_content = create_lexical_json(parsed_content, interlinking_map)
    data = {
        "posts": [
            {
                "title": parsed_content["title"],
                "lexical": lexical_content,
                "excerpt": parsed_content.get("summary", ""),
                "tags": [{"name": tag} for tag in parsed_content["tags"]],
                "status": "published",
                "authors": ["<your-author>"],
                # `image` is the response returned by upload_image_to_ghost
                "feature_image": image["images"][0]["url"],
            }
        ]
    }
    headers = {
        "Authorization": f"Ghost {generate_jwt()}",
        "Content-Type": "application/json",
        "Accept-Version": "v3.0",
    }
    response = requests.post(url, json=data, headers=headers)
    if response.status_code == 201:
        return response.json()
    else:
        raise Exception(f"Failed to create post: {response.text}")

Let's break it down. create_ghost_post expects parsed_content, an image, and an interlinking_map:

- parsed_content is the parsed JSON content that we generated using the LLM.

- image is the featured image that we want in our blog post to make it pretty.

- interlinking_map is a mapping of internal blog post titles to their URLs.

We will revisit all these again.
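As a rough sketch of what interlinking_map could look like in practice, the helper below turns a list of existing posts into a title-to-URL mapping. It assumes each post is a dict with "title" and "url" keys, as in the posts returned by the Ghost Admin API; `build_interlinking_map`, `max_links`, and the example URLs are hypothetical.

```python
import random


def build_interlinking_map(posts, max_links=3, seed=None):
    """Map post titles to URLs for a random sample of existing posts."""
    # Skip entries that lack a title or URL.
    candidates = [post for post in posts if post.get("title") and post.get("url")]
    rng = random.Random(seed)
    chosen = rng.sample(candidates, min(max_links, len(candidates)))
    return {post["title"]: post["url"] for post in chosen}


if __name__ == "__main__":
    existing_posts = [
        {"title": "First post", "url": "https://blog.example.com/first-post/"},
        {"title": "Second post", "url": "https://blog.example.com/second-post/"},
    ]
    print(build_interlinking_map(existing_posts, max_links=2))
```

Sampling a few posts at random keeps the list of links at the end of each article short while still spreading links across older posts over time.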

The create_lexical_json function is the important one here: it formats your blog content. It's a huge block of code, so I've attached it at the end of this post.

Let's create a function to upload an image to Ghost.

import os

def upload_image_to_ghost(image_path):
    url = f"{GHOST_API_URL}images/upload/"
    headers = {
        "Authorization": f"Ghost {generate_jwt()}",
        "Accept-Version": "v3.0",
    }
    filename = os.path.basename(image_path)
    with open(image_path, "rb") as image_file:
        # The content type is hardcoded; change it if your images are not PNGs.
        files = {"file": (filename, image_file, "image/png")}
        data = {"purpose": "image", "ref": image_path}
        response = requests.post(url, headers=headers, files=files, data=data)
    response.raise_for_status()
    return response.json()

You'll need a folder of images that you want to feature in your blog posts.

You can randomly pick an image from your folder and pass that path to this function like this:

random_image = random.choice(images)

Sweet. 🍬
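The `images` list is not defined in the snippet above, so here is a minimal sketch of how it could be built from a folder. `list_images`, `pick_random_image`, and the extension set are assumptions for illustration.

```python
import random
from pathlib import Path

# File extensions treated as images (an assumption; adjust as needed).
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}


def list_images(folder):
    """Return the paths of all image files directly inside `folder`, sorted."""
    return sorted(
        str(path)
        for path in Path(folder).iterdir()
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS
    )


def pick_random_image(folder):
    """Pick one image path at random, failing loudly if the folder has none."""
    images = list_images(folder)
    if not images:
        raise FileNotFoundError(f"No images found in {folder}")
    return random.choice(images)
```

The returned path can then be passed straight to upload_image_to_ghost.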

That's all the pieces we need. 🎉 Now you can arrange all of this in a Python file and run it however you want. I created a cron job that runs the Python code daily 😎 Adapt the schedule to your own needs.

Thanks for reading! *️⃣

Here is the snippet for creating Lexical JSON content. WARNING!!! this is very hacky but you should get the idea 😅

def create_lexical_json(parsed_content, interlinking_map=None):
    lexical_content = {
        "root": {
            "children": [],
            "direction": "ltr",
            "format": "",
            "indent": 0,
            "type": "root",
            "version": 1,
        }
    }

    # Add summary
    if "summary" in parsed_content:
        lexical_content["root"]["children"].append(
            {
                "children": [
                    {
                        "detail": 0,
                        "format": 0,
                        "mode": "normal",
                        "style": "",
                        "text": parsed_content["summary"],
                        "type": "extended-text",
                        "version": 1,
                    }
                ],
                "direction": "ltr",
                "format": "",
                "indent": 0,
                "type": "paragraph",
                "version": 1,
            }
        )

    # Add introduction
    if "introduction" in parsed_content:
        lexical_content["root"]["children"].extend(
            [
                {
                    "children": [
                        {
                            "detail": 0,
                            "format": 0,
                            "mode": "normal",
                            "style": "",
                            "text": "Introduction",
                            "type": "extended-text",
                            "version": 1,
                        }
                    ],
                    "direction": "ltr",
                    "format": "",
                    "indent": 0,
                    "type": "heading",
                    "tag": "h2",
                    "version": 1,
                },
                {
                    "children": [
                        {
                            "detail": 0,
                            "format": 0,
                            "mode": "normal",
                            "style": "",
                            "text": parsed_content["introduction"],
                            "type": "extended-text",
                            "version": 1,
                        }
                    ],
                    "direction": "ltr",
                    "format": "",
                    "indent": 0,
                    "type": "paragraph",
                    "version": 1,
                },
            ]
        )

    # Add main content
    for section in parsed_content.get("main_content", []):
        lexical_content["root"]["children"].extend(
            [
                {
                    "children": [
                        {
                            "detail": 0,
                            "format": 0,
                            "mode": "normal",
                            "style": "",
                            "text": section["subheading"],
                            "type": "extended-text",
                            "version": 1,
                        }
                    ],
                    "direction": "ltr",
                    "format": "",
                    "indent": 0,
                    "type": "heading",
                    "tag": "h2",
                    "version": 1,
                },
                {
                    "children": [
                        {
                            "detail": 0,
                            "format": 0,
                            "mode": "normal",
                            "style": "",
                            "text": section["content"],
                            "type": "extended-text",
                            "version": 1,
                        }
                    ],
                    "direction": "ltr",
                    "format": "",
                    "indent": 0,
                    "type": "paragraph",
                    "version": 1,
                },
            ]
        )

    # Add conclusion
    if "conclusion" in parsed_content:
        lexical_content["root"]["children"].extend(
            [
                {
                    "children": [
                        {
                            "detail": 0,
                            "format": 0,
                            "mode": "normal",
                            "style": "",
                            "text": "Conclusion",
                            "type": "extended-text",
                            "version": 1,
                        }
                    ],
                    "direction": "ltr",
                    "format": "",
                    "indent": 0,
                    "type": "heading",
                    "tag": "h2",
                    "version": 1,
                },
                {
                    "children": [
                        {
                            "detail": 0,
                            "format": 0,
                            "mode": "normal",
                            "style": "",
                            "text": parsed_content["conclusion"],
                            "type": "extended-text",
                            "version": 1,
                        }
                    ],
                    "direction": "ltr",
                    "format": "",
                    "indent": 0,
                    "type": "paragraph",
                    "version": 1,
                },
            ]
        )

    # Add an ordered list of links to other posts
    if interlinking_map:
        interlink_children = []
        # c numbers the list items starting from 1
        for c, (title, url) in enumerate(interlinking_map.items(), start=1):
            interlink_children.append(
                {
                    "children": [
                        {
                            "children": [
                                {
                                    "detail": 0,
                                    "format": 0,
                                    "mode": "normal",
                                    "style": "",
                                    "text": title,
                                    "type": "text",
                                    "version": 1,
                                }
                            ],
                            "direction": "ltr",
                            "format": "",
                            "indent": 0,
                            "type": "link",
                            "version": 1,
                            "rel": "noopener",
                            "target": "_blank",
                            "url": url,
                        },
                    ],
                    "direction": "ltr",
                    "format": "",
                    "indent": 0,
                    "type": "listitem",
                    "version": 1,
                    "value": c,
                }
            )
        lexical_content["root"]["children"].extend(
            [
                {
                    "children": [
                        {
                            "detail": 0,
                            "format": 0,
                            "mode": "normal",
                            "style": "",
                            "text": "Read more about <your-saas-name>",
                            "type": "extended-text",
                            "version": 1,
                        }
                    ],
                    "direction": "ltr",
                    "format": "",
                    "indent": 0,
                    "type": "heading",
                    "tag": "h2",
                    "version": 1,
                },
                {
                    "children": interlink_children,
                    "direction": "ltr",
                    "format": "",
                    "indent": 0,
                    "type": "list",
                    "version": 1,
                    "start": 1,
                    "tag": "ol",
                },
            ]
        )

    return json.dumps(lexical_content)

This will format your blog content and add interlinks as well. You can view the live example here: https://blog.dreamery.ai/
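If the repetition in that function bothers you, the same node shapes can be produced by a few small helpers. This is only a sketch of one possible refactor: `text_node`, `block`, `paragraph`, `heading`, and `build_lexical` are hypothetical names, and the example covers just the summary and main content sections.

```python
import json


def text_node(text, node_type="extended-text"):
    """A leaf node holding plain text."""
    return {
        "detail": 0,
        "format": 0,
        "mode": "normal",
        "style": "",
        "text": text,
        "type": node_type,
        "version": 1,
    }


def block(node_type, children, **extra):
    """A container node (root, paragraph, heading, ...) with optional extra keys."""
    node = {
        "children": children,
        "direction": "ltr",
        "format": "",
        "indent": 0,
        "type": node_type,
        "version": 1,
    }
    node.update(extra)
    return node


def paragraph(text):
    return block("paragraph", [text_node(text)])


def heading(text, tag="h2"):
    return block("heading", [text_node(text)], tag=tag)


def build_lexical(parsed_content):
    """Build a Lexical JSON string from the summary and main content sections."""
    children = []
    if "summary" in parsed_content:
        children.append(paragraph(parsed_content["summary"]))
    for section in parsed_content.get("main_content", []):
        children.append(heading(section["subheading"]))
        children.append(paragraph(section["content"]))
    return json.dumps({"root": block("root", children)})
```

The introduction, conclusion, and interlink list could be added the same way, with one `heading` or `paragraph` call per node.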

Also please consider checking out my app Dreamery. Thanks! 🤗
